In today’s post we look at ChatGPT, the chatbot that has taken the technology world by storm. Everyone is talking about it, so let’s see what makes it special.

What is ChatGPT?

ChatGPT is an artificial intelligence program developed by OpenAI, an artificial intelligence research organization founded in 2015 by a group that included Elon Musk, Sam Altman, Greg Brockman, Ilya Sutskever and Wojciech Zaremba. OpenAI released its first GPT model in 2018, and ChatGPT itself was introduced in November 2022.

ChatGPT is based on GPT-3.5, a refinement of GPT-3, the third model in OpenAI’s GPT (Generative Pre-trained Transformer) series of natural language processing models. It is a pre-trained, large-scale language model: it learned from an immense pool of internet text and other sources during training, and it draws on what it learned then rather than looking things up when it answers.

This AI is a well of knowledge, but its ability to communicate is what sets it apart from other technologies.

It has been fine-tuned for several language generation tasks, including translation, summarization, text completion, question answering and natural, human-sounding conversation.

ChatGPT is a transformer-based neural network that produces answers in human writing patterns. It has been trained on very large amounts of text data so that it can pick up on context and relevance and generate human-like responses to questions.

Other fast facts about ChatGPT include:

- ChatGPT is large-scale. GPT-3, the model family it builds on, has 175 billion parameters, which made it one of the largest language models at the time of its release.

- ChatGPT is pre-trained. The expensive training work has already been done by OpenAI, so users can start asking questions without training anything themselves.

- ChatGPT is capable of multitasking. The same model handles several language tasks, so a single conversation can mix translation, summarization and question answering.

- ChatGPT responds in real time. Like a chatbot you’d find while online shopping, ChatGPT starts responding within seconds of being asked a question or given a task.
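
To get a feel for what "large-scale" means, here is a rough back-of-the-envelope sketch in Python. It takes the 175 billion parameter figure quoted above and assumes each parameter is stored as a 16-bit or 32-bit floating-point number. Those storage formats are an assumption made for illustration, not a statement about how OpenAI actually stores the model.

```python
# The 175 billion parameter figure quoted in the list above.
PARAMETERS = 175_000_000_000


def weight_storage_gb(parameters, bytes_per_parameter):
    """Return the storage needed for the weights alone, in decimal gigabytes."""
    return parameters * bytes_per_parameter / 1e9


# Assumed storage formats: 2 bytes (16-bit) or 4 bytes (32-bit) per parameter.
for label, size in (("16-bit floats", 2), ("32-bit floats", 4)):
    print(f"{label}: {weight_storage_gb(PARAMETERS, size):,.0f} GB")
```

Under those assumptions the weights alone take roughly 350 GB or 700 GB, far more than an ordinary laptop can hold in memory, which is one reason models of this size run in data centers.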

How does ChatGPT work?

ChatGPT uses a vast neural network to produce the human-like language through which it communicates. But how does that process happen?

Take a look below for a step-by-step breakdown of the process:

- Input processing: The human user types commands or questions into ChatGPT’s text bar.

- Tokenization: The input text is tokenized, meaning the program divides it into tokens (whole words or pieces of words) to be analyzed.

- Input embedding: Each token is converted into a numerical vector (an embedding), and those vectors are fed into the neural network’s transformer layers.

- Self-attention: The transformer uses attention to relate every token to the others in the context and produces a probability distribution over possible next tokens. The output is then built one token at a time by repeatedly picking from that distribution.

- Text generation and output: ChatGPT generates its output answer, and the human user receives a text response.
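
The steps above can be sketched as a toy Python program. This is only an illustration under heavy assumptions: the ten-piece vocabulary, the random embeddings and every function name are made up for this post, the greedy longest-match splitter stands in for the learned tokenizer a real model uses, and averaging the context vectors stands in for the transformer’s attention layers. What it does show faithfully is the overall loop: text becomes token ids, ids become vectors, vectors become a probability distribution, and the next token is sampled from that distribution.

```python
import math
import random

# Hypothetical toy vocabulary of word pieces; real models learn tens of thousands.
VOCAB = ["chat", "gpt", "is", "a", "language", "model", "un", "believ", "able", "<unk>"]
TOKEN_TO_ID = {piece: i for i, piece in enumerate(VOCAB)}


def tokenize(text):
    """Greedy longest-match split of each word into known pieces (a stand-in tokenizer)."""
    ids = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in TOKEN_TO_ID:
                    ids.append(TOKEN_TO_ID[piece])
                    start = end
                    break
            else:
                # No known piece starts here: emit <unk> and skip one character.
                ids.append(TOKEN_TO_ID["<unk>"])
                start += 1
    return ids


def make_embeddings(dim, seed=0):
    """One random vector per vocabulary entry; a trained model learns these values."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in VOCAB]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_distribution(token_ids, embeddings):
    """Score every vocabulary entry against the averaged context vector."""
    if not token_ids:
        raise ValueError("need at least one token of context")
    dim = len(embeddings[0])
    # Stand-in for the attention layers: a plain average of the context vectors.
    context = [sum(embeddings[t][d] for t in token_ids) / len(token_ids) for d in range(dim)]
    logits = [sum(c * e for c, e in zip(context, row)) for row in embeddings]
    return softmax(logits)


def generate(prompt, steps=3, seed=0):
    """Append `steps` sampled tokens to the tokenized prompt, one at a time."""
    rng = random.Random(seed)
    embeddings = make_embeddings(dim=8)
    ids = tokenize(prompt)
    for _ in range(steps):
        probs = next_token_distribution(ids, embeddings)
        ids.append(rng.choices(range(len(VOCAB)), weights=probs, k=1)[0])
    return [VOCAB[i] for i in ids]


print(tokenize("ChatGPT is unbelievable"))
print(generate("ChatGPT is a", steps=3))
```

Because the embeddings here are random, the generated tokens are meaningless. In a real model, training shapes the numbers so that likely continuations receive high probability.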

What are ChatGPT’s capabilities?

ChatGPT has extensive capabilities that will likely change the landscape of many industries.

The artificial intelligence program can complete tasks like:

- Text generation.

- Text completion.

- Question-answering.

- Summarization.

- Text translation.

- Conversational AI.

- Sentiment analysis.

- Named entity recognition.

- Part-of-speech tagging.

What are some limitations of ChatGPT?

Although ChatGPT is one of the most advanced AI language models available, it has its limitations.

– Bias

Like any other machine learning model, ChatGPT may have biases embedded in its training data. These can surface in its answers as gender bias, racial bias or ageism.

– Data privacy

Any technology can raise privacy concerns. ChatGPT was built from massive amounts of data, which means that anyone whose writing or personal details ended up in the training data is possibly vulnerable, since that data is now part of the trained system and could be reproduced or reused.

– Misinformation

While ChatGPT is impressive, its training data includes text from the Internet, and not everything online is true. There is therefore no guarantee that the information ChatGPT gives you is accurate.

– Language understanding

Again, ChatGPT is highly capable but can still misinterpret certain words, sentences and questions, which can lead to off-topic responses.

– Lack of common sense and personability

No matter how smart artificial intelligence is, common sense and personality are human qualities. And while ChatGPT can recognize sentiment in text, it has no human experiences, goals or understanding of its own to draw on.

Is ChatGPT a threat to developers?

Early adopters of ChatGPT have used it to write Python code, as well as to reverse engineer shellcode and rewrite it in C. ChatGPT has sparked hope among people eager for the arrival of practical applications of AI, but it also raises the question of whether it will displace writers and developers in the same way robots and computers have replaced some cashiers, assembly-line workers and, perhaps in the future, taxi drivers.

It’s hard to say how sophisticated the AI text-creation capabilities will be in the future as the technology ingests more and more examples of our online writing. But I see it having very limited capabilities for programming. If anything, it could end up being just another tool in the developer’s kit to handle tasks that don’t take the critical thinking skills software engineers bring to the table.

ChatGPT has impressed a lot of people because it does a good job of simulating human conversation and sounding knowledgeable. It uses algorithms to analyze text, and humans fine-tune its training so that it answers user questions in full sentences that sound as if a person wrote them. But ChatGPT has flaws, and the same limitations that hamper its use for writing content also render it unreliable for creating code. Because it’s based on data, not human intelligence, its sentences can sound coherent but fail to provide critically informed responses. It can also reproduce offensive content such as hate speech. Answers may sound reasonable but can be inaccurate.

OpenAI’s website provides an example of using ChatGPT to help debug code. Its responses are generated from patterns in previously written code and are not checked the way human QA would check them, which means it can generate code that has errors and bugs. OpenAI acknowledged that ChatGPT “sometimes writes plausible-sounding but incorrect or nonsensical answers.” This is why its output should not go into production code as-is.
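
To make "plausible-sounding but incorrect" concrete, here is a small hypothetical illustration in Python. The first function is not actual ChatGPT output. It is an invented example of the kind of answer that looks right at a glance, and both function names are made up for this post. A handful of assertions, the sort of check a human reviewer or a test suite would apply, is enough to expose the bug.

```python
def is_leap_year_suggested(year):
    """Hypothetical AI-style suggestion: reads well, but ignores the century rule."""
    return year % 4 == 0


def is_leap_year_reviewed(year):
    """Reviewed version: century years are leap years only if divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Both versions agree on the easy cases.
for year in (2023, 2024):
    assert is_leap_year_suggested(year) == is_leap_year_reviewed(year)

# 1900 was not a leap year, but only the reviewed version knows that.
assert is_leap_year_suggested(1900) is True
assert is_leap_year_reviewed(1900) is False

# 2000 was a leap year because it is divisible by 400.
assert is_leap_year_reviewed(2000) is True
```

The suggested version passes the obvious cases and fails only on century years, which is why output like this needs review and tests before anyone relies on it.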

The lack of reliability is already creating problems for the developer community. Stack Overflow, a question-and-answer website coders use to write and troubleshoot code, temporarily banned ChatGPT-generated answers, saying there was such a huge volume of them that it couldn’t keep up with quality control, which is done by humans. The site explained: “Overall, because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking or looking for correct answers.”

Coding errors aside, ChatGPT, like all machine learning tools, is trained on data suited to its intended output (in this case, text), so it lacks the understanding of the human context of computing that good programming requires. Software engineers need to understand the intended purpose of the software they’re creating and the people who will be using it. Good software can’t be built by cobbling together programs with regurgitated code.

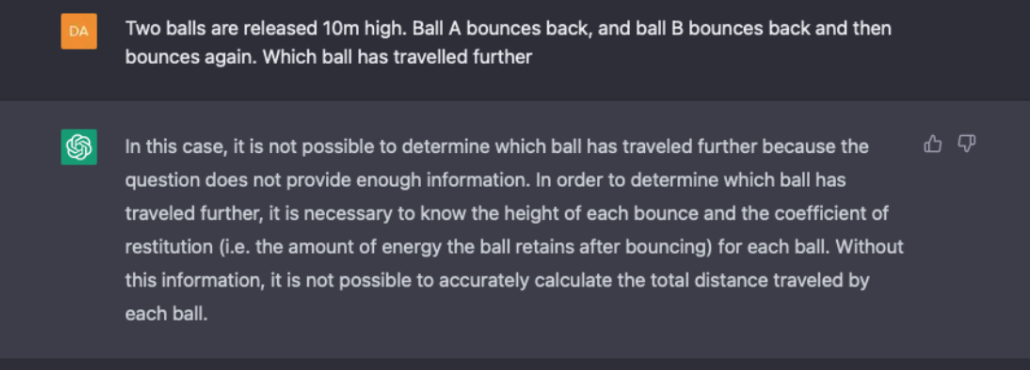

For example, ChatGPT cannot understand the ambiguity in simple requirements as shown below:

To a person it is clear that if one ball bounces once and comes back while another bounces and then bounces again, the second ball has traveled further. ChatGPT struggles with this nuance, and that kind of nuance will be needed if these systems are ever to take over from developers.

Pre-trained AI code generation also raises some legal questions with regard to intellectual property rights; it cannot currently distinguish between code under a restrictive license and code under an open one. This could expose people to licensing compliance risk if the AI borrows a prewritten line of code from a restrictively licensed repository. The problem has already prompted a class action lawsuit over GitHub Copilot, a different product built on OpenAI models.

We need humans to create the software people rely on, but that’s not to say there couldn’t be a place for AI in software development. Just like automation is being used by security operations centers for scanning, monitoring and basic incident response, AI could serve as a programming tool for handling lower-level tasks.

Machine learning systems are becoming more advanced each day; however, they cannot think like the human brain does. This has been the case for the past 40+ years of study into artificial intelligence. While these systems can recognize patterns and increase productivity for simple tasks, they may not always produce code as well as humans. In the meantime, machine learning can help with simple programming problems, freeing developers to think about more complex issues.

At this point, ChatGPT won’t be disrupting any field of technology, especially not software engineering. Concern about robots displacing programmers is vastly overstated. There will always be tasks that developers with human cognition can do that machines will never be capable of.